How can quantum computing accelerate machine learning model training?


by Maximilian 10:10am Feb 01, 2025

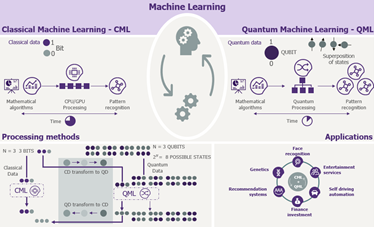

Quantum computing has the potential to accelerate parts of machine learning (ML) model training by exploiting quantum mechanical phenomena such as superposition, entanglement, and interference. Quantum computing is still in its early stages, and most proposed speedups hold only under specific conditions or have been demonstrated only on small problems, but its potential for speeding up ML training and enhancing model performance is an area of great interest. Here’s how quantum computing could accelerate machine learning model training:

1. Quantum Speedup in Linear Algebra Operations

Description: A significant portion of machine learning involves linear algebra operations, such as matrix multiplication, eigenvalue decomposition, and singular value decomposition (SVD), which are fundamental in many algorithms (e.g., linear regression, principal component analysis).

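How it helps: Quantum algorithms such as Harrow-Hassidim-Lloyd (HHL) for linear systems and quantum singular value decomposition (QSVD) can, under specific conditions, perform certain linear algebra tasks exponentially faster than known classical algorithms. The conditions matter: the matrix must be sparse (or low-rank) and well-conditioned, the input vector must be loadable into a quantum state efficiently, and the result is a quantum state rather than the full solution vector, so only summary quantities can be read out cheaply. Solving systems of linear equations is a key operation in training linear models and in second-order optimization methods. Some of these speedups have since been matched by "dequantized" classical algorithms for low-rank problems.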

Impact on ML: When those conditions are met, this speedup could shorten training for models that rely heavily on matrix operations, such as linear regression, Support Vector Machines (SVMs), and principal component analysis (PCA), particularly on large datasets.
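The standard asymptotic costs show both the promise and the fine print. In this worked comparison, N is the matrix dimension, s its sparsity (nonzeros per row), κ its condition number, and ε the target precision; the classical figure assumes conjugate gradient applied to the normal equations of a general (not necessarily positive-definite) matrix.

```latex
T_{\text{CG}} = O\!\left(N\, s\, \kappa \log\frac{1}{\varepsilon}\right)
\qquad\text{vs.}\qquad
T_{\text{HHL}} = \tilde{O}\!\left(\frac{\log(N)\, s^{2}\, \kappa^{2}}{\varepsilon}\right)
```

The exponential gap is in N alone. HHL scales worse in κ and ε, and it returns the solution as a quantum state |x⟩; reading out all N entries would take at least order N measurements, which cancels the advantage unless only a summary such as an expectation value ⟨x|M|x⟩ is needed.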

2. Quantum-Enhanced Optimization

Description: Machine learning involves optimizing objective functions, which can be computationally expensive. Quantum algorithms may be able to solve some of these optimization problems more efficiently.

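How it helps: Quantum optimization algorithms such as the Quantum Approximate Optimization Algorithm (QAOA) target combinatorial optimization problems (e.g., MaxCut): a parameterized quantum circuit prepares candidate solutions, and a classical optimizer tunes the circuit parameters. QAOA is a heuristic; no exponential speedup over classical methods has been proven, and any advantage is problem-dependent. Training deep neural networks is a different kind of problem (continuous optimization of weights, e.g., with gradient descent). Weight optimization is a key bottleneck there, and quantum methods could in principle speed it up, but this remains an open research question.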

Impact on ML: If these methods mature, quantum optimization could make it feasible to train very large models with vast numbers of parameters, improving the scalability of machine learning applications.
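A tiny, fully classical simulation makes QAOA's hybrid structure concrete. The sketch below assumes a hypothetical 4-node ring graph for MaxCut and simulates a depth-1 (p = 1) QAOA circuit exactly with a statevector, using only the Python standard library. The graph, the grid search, and the function names are illustrative choices, not part of any quantum SDK. The circuit produces an expected cut value, and a classical optimizer (here a coarse grid search) tunes the two angles γ and β.

```python
import cmath
import math

# Hypothetical toy problem: MaxCut on a 4-node ring graph.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]
N_QUBITS = 4


def cut_value(z, edges):
    """Number of edges cut by the bit assignment encoded in integer z."""
    return sum(1 for i, j in edges if ((z >> i) & 1) != ((z >> j) & 1))


def qaoa_expectation(gamma, beta, n=N_QUBITS, edges=EDGES):
    """Expected cut value of a depth-1 QAOA state, via exact statevector simulation."""
    dim = 2 ** n
    costs = [cut_value(z, edges) for z in range(dim)]
    # Start in the uniform superposition |+>^n.
    state = [complex(1 / math.sqrt(dim))] * dim
    # Cost layer exp(-i*gamma*C) is diagonal: one phase per basis state.
    state = [amp * cmath.exp(-1j * gamma * c) for amp, c in zip(state, costs)]
    # Mixer layer exp(-i*beta*X) on every qubit: cos(beta)*I - i*sin(beta)*X.
    cos_b, sin_b = math.cos(beta), math.sin(beta)
    for q in range(n):
        mask = 1 << q
        state = [cos_b * state[z] - 1j * sin_b * state[z ^ mask] for z in range(dim)]
    # Expectation of C = sum over basis states of |amplitude|^2 * cost.
    return sum(abs(amp) ** 2 * c for amp, c in zip(state, costs))


def grid_search(steps=24):
    """Classical outer loop: coarse grid search over (gamma, beta)."""
    best = (-1.0, 0.0, 0.0)
    for a in range(steps):
        for b in range(steps):
            gamma = math.pi * a / steps
            beta = (math.pi / 2) * b / steps
            value = qaoa_expectation(gamma, beta)
            if value > best[0]:
                best = (value, gamma, beta)
    return best


if __name__ == "__main__":
    value, gamma, beta = grid_search()
    print(f"best expected cut {value:.3f} at gamma={gamma:.3f}, beta={beta:.3f}")
```

With γ = β = 0 the circuit leaves the uniform superposition unchanged, so the expected cut equals the random-guess baseline of 2; the grid search finds angles that do noticeably better, while the true maximum cut of this graph is 4. Simulating the circuit classically is only feasible because the state has 2^4 = 16 amplitudes; the cost doubles with every added qubit.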

3. Quantum Machine Learning Algorithms

Description: Quantum machine learning (QML) algorithms are designed to run on quantum computers and exploit quantum properties to improve performance. These algorithms can potentially solve certain machine learning tasks faster than classical algorithms.

How it helps: Algorithms such as Quantum Support Vector Machines (QSVM), Quantum k-Nearest Neighbors (QkNN), and Quantum Neural Networks (QNNs) encode data in quantum states and use quantum circuits to compute quantities such as state overlaps (similarities). Their potential advantage comes from working in very large state spaces and from interference, not from simply trying every solution at once: measuring a quantum state returns only one outcome, so any speedup depends on how well the algorithm concentrates probability on useful answers.

Impact on ML: QML algorithms may handle certain complex, high-dimensional data more efficiently. For example, a quantum SVM might process some classification problems faster than a classical SVM, in both training and inference, though such advantages remain largely theoretical so far.
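Quantum k-nearest-neighbor classifiers typically compare a query with training points through the swap test, whose ancilla qubit reads 0 with probability (1 + F)/2, where F = |⟨a|b⟩|² is the fidelity of the two encoded states. The sketch below assumes 2-D points amplitude-encoded into single-qubit states and a 1-nearest-neighbor rule. Rather than simulating the swap-test gates, it samples shots directly from that known outcome distribution, so the similarity estimates carry realistic shot noise. The data and function names are hypothetical.

```python
import math
import random


def encode(point):
    """Amplitude-encode a nonzero 2-D real vector as a single-qubit state (a unit vector)."""
    norm = math.hypot(point[0], point[1])
    return (point[0] / norm, point[1] / norm)


def swap_test_fidelity(a, b, shots, rng):
    """Estimate F = |<a|b>|^2 from simulated swap-test shots.

    The swap-test ancilla reads 0 with probability (1 + F) / 2, so F is
    estimated as 2 * (fraction of zeros) - 1. Shots are sampled from that
    distribution directly instead of simulating the controlled-SWAP circuit.
    """
    overlap = a[0] * b[0] + a[1] * b[1]  # real amplitudes: no complex conjugate needed
    p_zero = (1 + overlap ** 2) / 2
    zeros = sum(1 for _ in range(shots) if rng.random() < p_zero)
    return 2 * zeros / shots - 1


def classify_1nn(query, training, shots=2000, seed=0):
    """1-nearest-neighbor rule: return the label of the most similar training point."""
    rng = random.Random(seed)
    q = encode(query)
    best_label, best_fid = None, -math.inf
    for point, label in training:
        fid = swap_test_fidelity(q, encode(point), shots, rng)
        if fid > best_fid:
            best_label, best_fid = label, fid
    return best_label


# Hypothetical toy training set: two well-separated classes.
TRAINING = [((1.0, 0.1), "A"), ((0.9, 0.2), "A"), ((0.1, 1.0), "B"), ((0.2, 0.9), "B")]
print(classify_1nn((0.95, 0.05), TRAINING))  # prints A
```

Because each similarity is estimated from a finite number of shots, the classifier's reliability depends on the shot budget: 2000 shots per comparison easily separate these two well-separated classes, but close calls would need more.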

4. Quantum Parallelism for Faster Data Processing

Description: A register of n qubits can be placed in a superposition of all 2^n basis states, so a single quantum operation acts on every component of that superposition at once. This is often called quantum parallelism.

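How it helps: Quantum parallelism can, in principle, be applied to many data points or parameter combinations at once, which could help with tasks like data fitting, feature selection, or searching over parameters. The catch is readout: measuring the register returns a single outcome, so a quantum computer cannot simply evaluate many possibilities and report them all. Useful algorithms combine parallelism with interference; Grover's search, for example, finds a marked item among N possibilities with O(√N) queries instead of the O(N) a classical search needs, a quadratic rather than exponential speedup.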

Impact on ML: In training machine learning models, particularly deep learning models with many layers and parameters, algorithms built on quantum parallelism could reduce the number of iterations or the time required to converge to a good solution.
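A small statevector simulation of Grover's search shows both halves of this picture. The uniform superposition assigns an amplitude to all 2^n inputs at once, yet measuring it right away finds the marked item only with probability 1/N; it is the repeated oracle-plus-diffusion steps, which rely on interference, that push that probability close to 1 after about (π/4)√N iterations. The register size and marked index below are arbitrary choices for illustration.

```python
import math


def grover_success_probability(n_qubits, marked, iterations):
    """Statevector simulation of Grover search; returns P(measuring `marked`)."""
    dim = 2 ** n_qubits
    amps = [1 / math.sqrt(dim)] * dim  # uniform superposition over all 2^n inputs
    for _ in range(iterations):
        amps[marked] = -amps[marked]  # oracle: flip the phase of the marked item
        mean = sum(amps) / dim
        amps = [2 * mean - a for a in amps]  # diffusion: inversion about the mean
    return amps[marked] ** 2  # Born rule: probability = |amplitude|^2


n = 6  # N = 64 possible inputs
optimal = math.floor(math.pi / 4 * math.sqrt(2 ** n))  # about (pi/4) * sqrt(N) iterations
for k in (0, 1, optimal):
    print(k, round(grover_success_probability(n, marked=42, iterations=k), 4))
```

For n = 6 qubits (N = 64), six iterations raise the success probability from 1/64 to above 0.99, whereas a classical search would need about 32 queries on average. The gain is quadratic, and simulating it classically like this costs time proportional to N per iteration.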

5. Quantum Feature Spaces for Better Generalization

Description: In machine learning, the representation of data in feature space is critical for learning effective patterns. Quantum computers can represent data in very high-dimensional feature spaces (the state space of n qubits has 2^n dimensions), some of which may be hard to work with using classical methods.

How it helps: Quantum computers can map data points into high-dimensional quantum states through a feature map and compare them with a quantum kernel (the overlap between two such states). This can produce complex decision boundaries, and for suitably chosen feature maps the kernel may be hard to compute classically. Whether this yields better representations and better generalization depends on the problem; a very expressive kernel can also overfit.

Impact on ML: Quantum-enhanced feature spaces could lead to better performance on tasks that involve complex or unstructured data (e.g., images, speech, or text), and in some cases could let quantum models outperform classical ones in accuracy even with limited data, though this has so far been shown mainly on small or specially constructed problems.
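To make the idea of a quantum kernel concrete, the sketch below simulates a simple, assumed feature map: each feature x_j sets the angle of a single-qubit RY rotation, and the qubits are combined by a tensor product. The kernel is the fidelity |⟨φ(x)|φ(y)⟩|², computed from explicit statevectors in plain Python. This map is chosen for readability and is easy to compute classically (it factorizes into a closed form, which the code uses as a check); feature maps proposed for quantum advantage add entangling gates so that no such shortcut is known.

```python
import math


def feature_state(x):
    """Angle-encoding feature map: RY(x_j)|0> on qubit j, combined by tensor product."""
    state = [1.0]
    for xj in x:
        qubit = [math.cos(xj / 2), math.sin(xj / 2)]  # RY(x_j)|0> has real amplitudes
        state = [a * b for a in state for b in qubit]  # Kronecker product
    return state


def quantum_kernel(x, y):
    """Fidelity kernel k(x, y) = |<phi(x)|phi(y)>|^2 from explicit statevectors."""
    overlap = sum(a * b for a, b in zip(feature_state(x), feature_state(y)))
    return overlap ** 2


def closed_form_kernel(x, y):
    """For this product feature map the kernel factorizes: prod_j cos^2((x_j - y_j) / 2)."""
    return math.prod(math.cos((a - b) / 2) ** 2 for a, b in zip(x, y))


# Hypothetical 3-feature data points.
data = [[0.1, 0.5, 1.2], [0.4, 0.3, 2.0], [2.5, 1.0, 0.2]]
gram = [[quantum_kernel(x, y) for y in data] for x in data]
```

The resulting `gram` matrix could be passed to any classical kernel method, such as an SVM that accepts a precomputed kernel. That is the usual structure of a quantum-kernel SVM: the quantum device only estimates kernel entries (on hardware, from repeated measurements rather than exact overlaps).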

6. Quantum Data Encoding

Description: Quantum computers can potentially encode data compactly: with amplitude encoding, a vector of 2^n numbers is stored in the amplitudes of just n qubits.

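How it helps: In classical machine learning, large datasets often require significant computational resources to process and analyze. Compact quantum encodings could let quantum systems represent and manipulate large datasets or complex features using far fewer qubits than there are data values. The catch is data loading: preparing an arbitrary encoded state generally takes a number of operations that grows with the size of the data, unless the data has special structure or a quantum RAM (QRAM) is available, and this cost can erase the speedup.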

Impact on ML: For example, when training deep learning models on large-scale image or genomic data, quantum computing could potentially reduce the time needed for data processing and training, provided the data can be loaded efficiently. This would be most helpful for datasets that strain classical memory or processing capabilities.
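Amplitude encoding itself is easy to state in code. The sketch below (plain Python, hypothetical helper name) pads a data vector to the next power of two, normalizes it, and reports how many qubits the resulting state needs. It only computes the target amplitudes; it does not build the state-preparation circuit, which is where the real cost lies.

```python
import math


def amplitude_encode(values):
    """Map a real vector to the amplitudes of an n-qubit state.

    Pads with zeros up to the next power of two and normalizes, so the
    squared amplitudes form a probability distribution over 2^n basis states.
    """
    n_qubits = max(1, math.ceil(math.log2(len(values))))
    padded = list(values) + [0.0] * (2 ** n_qubits - len(values))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0:
        raise ValueError("cannot encode the all-zero vector")
    return n_qubits, [v / norm for v in padded]


# Hypothetical 8-value data vector: fits in 3 qubits.
n_qubits, amps = amplitude_encode([3.0, 1.0, 4.0, 1.0, 5.0, 9.0, 2.0, 6.0])
probs = [a * a for a in amps]  # Born-rule measurement probabilities
```

Recovering the original values from a real device would mean estimating these probabilities from many repeated measurements, and computational-basis measurements reveal only squared magnitudes, not signs. Amplitude-encoded speedups therefore apply mainly when only aggregate quantities are needed.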

7. Quantum-Enhanced Sampling and Uncertainty Estimation

Description: Many machine learning algorithms rely on sampling methods to estimate distributions or optimize objective functions, and quantum computers may be able to speed up this process.

How it helps: Quantum algorithms for Monte Carlo estimation, built on quantum amplitude estimation, can in principle reach a given precision with quadratically fewer samples than classical Monte Carlo. (The similarly named "quantum Monte Carlo" methods are classical algorithms for simulating quantum systems.) Quantum computers could also be used to estimate uncertainties in model predictions more efficiently, which is important in applications like reinforcement learning or Bayesian networks.

Impact on ML: In reinforcement learning, quantum-enhanced sampling could speed up exploration of the state-action space, shortening the learning process and potentially improving model accuracy by enabling faster adaptation to complex environments.
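The quadratic gain can be written as a worked comparison under the usual idealized assumptions (an efficient circuit that encodes the quantity being estimated, no hardware noise). Here M is the number of classical samples or quantum oracle queries and ε is the estimation error:

```latex
\varepsilon_{\text{MC}} = O\!\left(\frac{1}{\sqrt{M}}\right) \;\Rightarrow\; M_{\text{MC}} = O\!\left(\frac{1}{\varepsilon^{2}}\right),
\qquad
\varepsilon_{\text{QAE}} = O\!\left(\frac{1}{M}\right) \;\Rightarrow\; M_{\text{QAE}} = O\!\left(\frac{1}{\varepsilon}\right)
```

For a target error of ε = 10⁻³, that is on the order of 10⁶ classical samples versus 10³ quantum queries, ignoring constant factors. Each quantum query is a circuit run, and the coherent circuits that amplitude estimation needs are deep, which is one reason near-term demonstrations remain small.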

8. Quantum Boltzmann Machines and Quantum Neural Networks

Description: Quantum Boltzmann Machines (QBMs) are quantum analogs of classical Boltzmann machines (generative models), and Quantum Neural Networks (QNNs) are parameterized quantum circuits trained in a way similar to classical neural networks. Both use quantum states to represent the model during training.

How it helps: QBMs and QNNs can use entanglement and superposition to represent complex probability distributions and patterns in data, some of which may be expensive to model classically. Whether they also update parameters faster than their classical counterparts is not established; QNN gradients, for example, are typically estimated from repeated circuit runs.

Impact on ML: These models could reduce training times for generative tasks (for example, quantum versions of generative adversarial networks, or GANs) and unsupervised learning, since quantum states can represent distributions over exponentially many configurations that classical models may struggle with.
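As an illustration of how QNN parameters are commonly updated, the sketch below trains a one-qubit variational circuit RY(θ)·RY(x)|0⟩ to match hypothetical targets cos(x + 0.7), simulating the circuit in plain Python. Gradients come from the parameter-shift rule, which gives the exact derivative of an expectation value for rotation gates such as RY from two extra circuit evaluations. This is a QNN sketch only; it does not implement a quantum Boltzmann machine, and the data, learning rate, and step count are assumptions.

```python
import math


def expval_z(x, theta):
    """<Z> after RY(theta) RY(x)|0>, computed from the simulated single-qubit state."""
    # RY(x)|0> = [cos(x/2), sin(x/2)]
    a0, a1 = math.cos(x / 2), math.sin(x / 2)
    # Apply RY(theta) = [[cos(t/2), -sin(t/2)], [sin(t/2), cos(t/2)]]
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    b0, b1 = c * a0 - s * a1, s * a0 + c * a1
    return b0 * b0 - b1 * b1  # <Z> = |amp_0|^2 - |amp_1|^2


def parameter_shift_grad(x, theta):
    """Exact d<Z>/d(theta) for an RY rotation, from two shifted circuit evaluations."""
    shift = math.pi / 2
    return (expval_z(x, theta + shift) - expval_z(x, theta - shift)) / 2


def train(xs, ys, theta=0.0, lr=0.2, steps=200):
    """Hybrid loop: circuit evaluations give gradients, a classical step updates theta."""
    for _ in range(steps):
        grad = sum(2 * (expval_z(x, theta) - y) * parameter_shift_grad(x, theta)
                   for x, y in zip(xs, ys)) / len(xs)
        theta -= lr * grad  # plain gradient descent on the mean squared error
    return theta


xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [math.cos(x + 0.7) for x in xs]  # hypothetical targets from a "true" theta of 0.7
theta = train(xs, ys)
```

Each gradient costs two circuit evaluations per parameter (and, on hardware, many measurement shots per evaluation), so the parameter-shift rule gives exact gradients but does not by itself make parameter updates cheaper than classical backpropagation.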

Challenges and Future Outlook

While quantum computing holds great promise for accelerating machine learning model training, there are several challenges that need to be addressed:

Noisy Intermediate-Scale Quantum (NISQ) Devices: Current quantum computers are still in the NISQ era, meaning they are prone to errors and limited in the number of qubits they can handle. Practical quantum computing for ML will require error-correction techniques and more stable, scalable quantum processors.

Quantum-Classical Hybrid Models: For the near term, quantum computing may be most effective in hybrid systems, where quantum computers are used for certain tasks (e.g., optimization, sampling), while classical computers handle other parts of the machine learning pipeline.

Conclusion

Quantum computing has the potential to transform machine learning by speeding up key operations such as optimization, linear algebra, and data processing. Techniques such as quantum optimization, quantum-enhanced feature spaces, and algorithms that combine quantum parallelism with interference could shorten training times, improve model performance, and handle larger datasets more efficiently, though most of these gains are still theoretical and depend on conditions such as efficient data loading. Realizing the full potential of quantum machine learning will depend on the development of more advanced quantum hardware and error-correction methods in the coming years.