What are the potential limitations of robotics in mental health treatment?

by Nathaniel 10:37am Feb 01, 2025

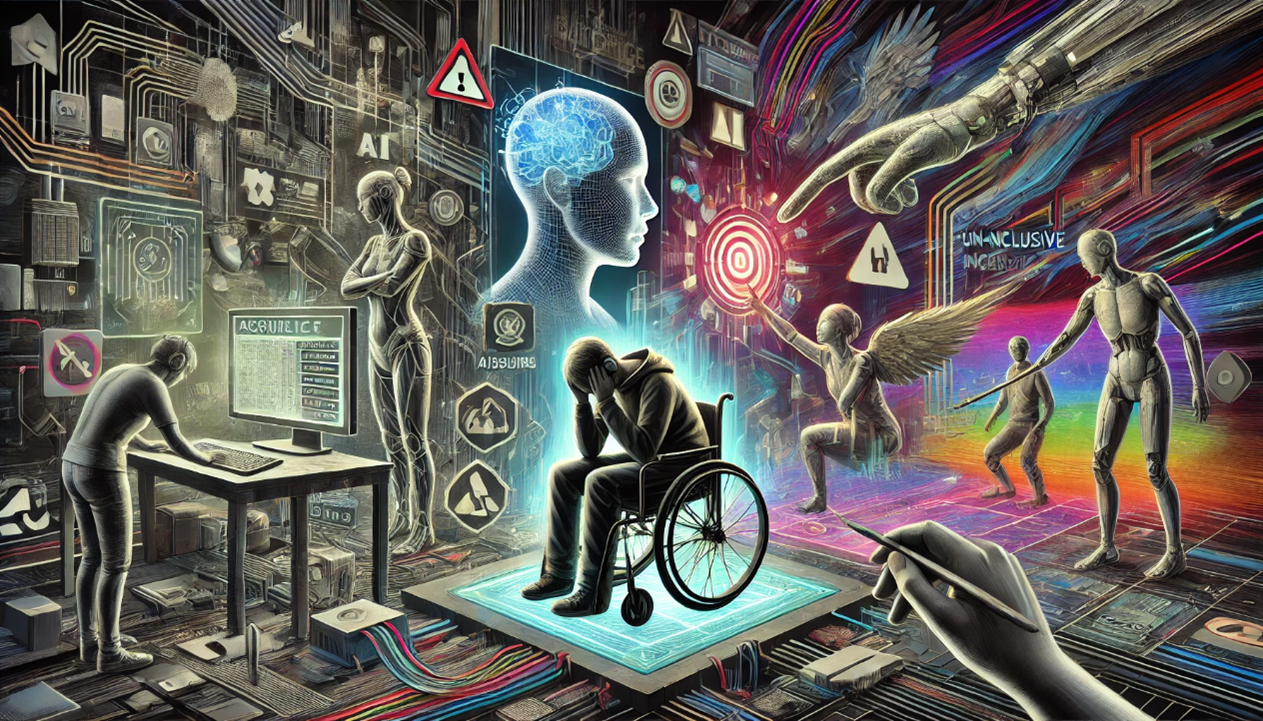

While robotics has the potential to transform mental health treatment by offering innovative solutions for therapy, diagnosis, and support, there are several limitations to its application in this field. The integration of robotics into mental health care, especially when paired with artificial intelligence (AI), presents unique challenges that need to be addressed to ensure effectiveness, safety, and ethical responsibility. Below are some of the key potential limitations of robotics in mental health treatment:

1. Lack of Human Empathy and Emotional Understanding

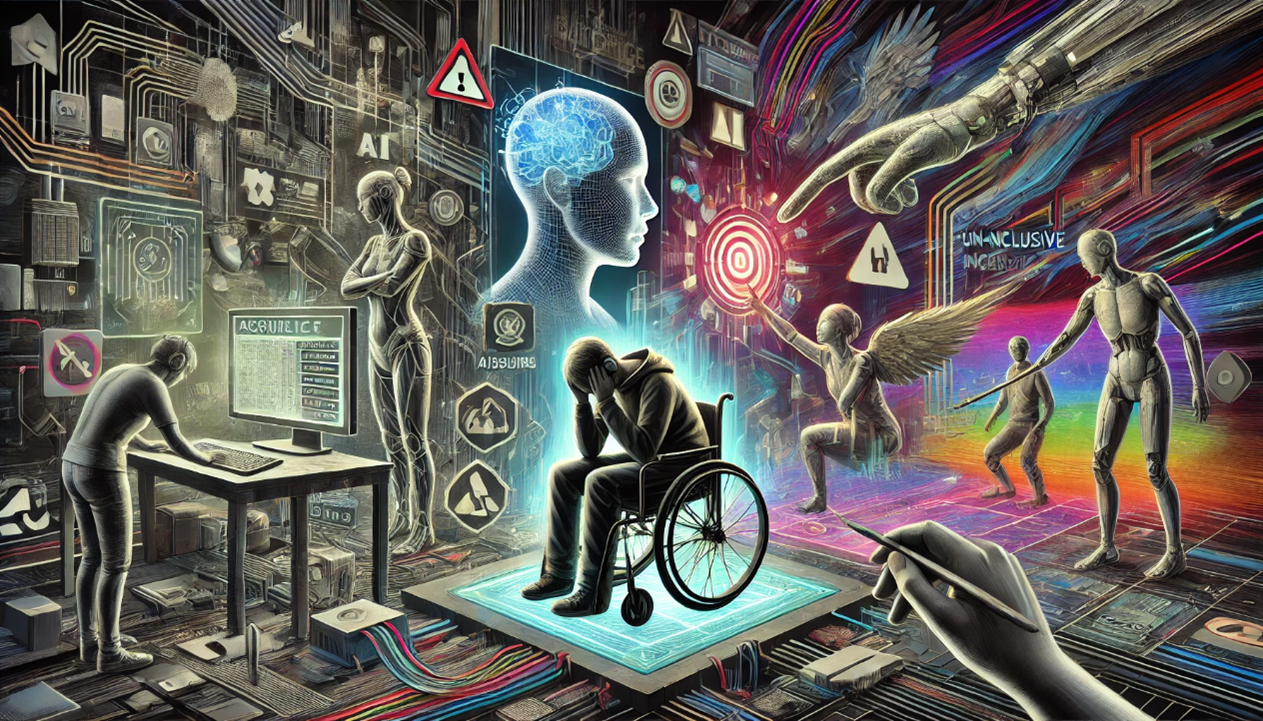

One of the most significant limitations of robotics in mental health treatment is the absence of genuine human empathy, which is crucial in many therapeutic settings. Mental health professionals, particularly psychologists and psychiatrists, rely heavily on emotional intelligence to understand the complexities of a patient's experiences and emotions.

Emotional Detachment: Robots, even if they are designed to recognize and respond to emotional cues, cannot truly understand human emotions or offer the deep emotional connection that a human therapist can provide. The subtle nuances of human emotion, including body language, tone of voice, and unspoken feelings, are difficult for robots to interpret accurately.

Therapeutic Relationship: Building a trusting relationship between a patient and therapist is often a cornerstone of successful therapy. While robotic systems can simulate certain aspects of conversation and empathy, the absence of a real human presence may undermine the therapeutic alliance, which is crucial for effective treatment, especially in conditions such as depression, anxiety, and trauma.

2. Ethical and Privacy Concerns

The integration of robotics and AI in mental health care raises important ethical questions, especially regarding privacy, confidentiality, and the handling of sensitive data.

Confidentiality: Mental health treatment involves sharing deeply personal information, and patients need to feel confident that their data is protected. The use of robots in therapy could raise concerns about data security, particularly if sensitive personal and emotional data is stored, shared, or processed by third-party organizations.

Data Usage and Consent: There are concerns about how patient data is collected, used, and shared. Patients may not fully understand how their data is being handled by robots or AI systems, which could lead to mistrust and reluctance to engage with these technologies.

Bias in AI Models: AI systems used in mental health robots may inherit biases from the data they are trained on. These biases could influence the way robots respond to different demographic groups, leading to unequal treatment or the reinforcement of harmful stereotypes. Ensuring that AI models are developed with fairness and inclusivity in mind is critical.

3. Limited Ability to Address Complex Mental Health Conditions

Mental health conditions are often complex, multifaceted, and deeply personal. Robots, even with sophisticated AI capabilities, may struggle to effectively address conditions that require nuanced, individualized care.

Complex Diagnosis and Treatment: Mental health diagnoses often involve subjective assessments and are influenced by a patient's cultural background, history, and personal experiences. Robots may not be equipped to take all of these factors into account. For example, diagnosing conditions like depression, bipolar disorder, or PTSD involves understanding a person's life story, emotional experiences, and social context, which current AI-based systems are not yet fully equipped to handle.

Treatment Flexibility: Therapy for mental health conditions often requires a flexible, adaptive approach, as treatment plans may need to evolve based on patient progress, changing life circumstances, or unforeseen challenges. AI-powered robots may struggle with this adaptability and may follow rigid, algorithmic approaches that do not account for the full complexity of a patient's situation.

4. Risk of Over-Reliance and Displacement of Human Therapists

Another potential limitation is the risk of over-reliance on robotics and AI in mental health treatment, leading to reduced human involvement in care.

Dehumanization of Care: If robots are used excessively, there is a concern that patients may lose out on the emotional support and understanding that only a human therapist can provide. Human connection plays a critical role in healing and recovery, especially for individuals with severe mental health conditions.

Displacement of Mental Health Professionals: The increased use of robotics in mental health could reduce the demand for human therapists, particularly in lower-resource settings or where robots are marketed as more cost-effective alternatives. While robots can enhance the efficiency of mental health services, they should be viewed as tools to complement, rather than replace, human practitioners.

Loss of Human Judgment: Robots, even with sophisticated AI, lack the nuanced judgment that experienced mental health professionals bring to treatment. For instance, a human therapist can discern subtle emotional changes in a patient, recognize the need for crisis intervention, and provide immediate support, whereas robots may not be able to respond with the same level of judgment and care.

5. Technical Limitations and Accessibility Issues

There are several technical limitations and accessibility barriers that could hinder the widespread use of robotic systems in mental health treatment.

Technological Barriers: Robotics and AI in mental health care are still in the early stages of development, and there are many technical challenges to overcome. These include issues such as poor voice recognition, difficulty understanding emotional cues, and a lack of adaptability to different patient needs. While AI has made significant strides, it may still struggle to fully replicate human-like interactions in a therapeutic context.

Cost and Access: Robotics and AI systems in mental health care can be expensive to both develop and implement. These technologies may be inaccessible to lower-income patients or to those living in rural areas where mental health services are already scarce. Additionally, the infrastructure required to support AI-driven robotic systems may not be available in all healthcare settings.

Technical Malfunctions: Like any technology, robotic systems can malfunction or fail. This could result in missed diagnoses, inappropriate treatment plans, or the failure to provide timely intervention, which is particularly critical in mental health care where patient well-being is at stake.

6. Social and Psychological Impact

The introduction of robots into the realm of mental health treatment also raises potential concerns about social and psychological effects on patients.

Stigma and Reluctance to Engage: Some individuals may be uncomfortable with the idea of receiving therapy or support from a robot, viewing it as less legitimate or less effective than human therapy. This could contribute to stigma surrounding the use of technology in mental health care and make patients hesitant to embrace robotic treatment options.

Impact on Human Relationships: For patients with mental health conditions, particularly those involving social isolation (e.g., depression, anxiety), human relationships are critical for recovery. Excessive reliance on robots for support could reinforce feelings of loneliness and hinder the development of real-world relationships, which are vital to emotional healing.

7. Limited Scope of Treatment

Robotic systems in mental health are currently limited in the types of conditions they can effectively address. While AI and robots may be useful in providing basic support, delivering cognitive behavioral therapy (CBT), or guiding patients through relaxation exercises, they are not yet capable of addressing the full range of mental health needs.

Complex Therapies: Mental health treatments like psychotherapy, which require deep emotional exploration and the ability to process complex emotions, may not be well suited to robotic intervention. These therapies rely heavily on the patient-therapist relationship, which robots cannot fully replicate.

Therapeutic Flexibility: Mental health conditions are diverse, and individuals respond differently to various treatment approaches. Robots are typically limited to programmed responses and are not yet equipped to adjust therapies dynamically based on the evolving needs of patients.

Conclusion

While robotics has the potential to bring new and innovative approaches to mental health care, several limitations must be carefully considered. The lack of true empathy, ethical concerns, technical barriers, and the risk of over-reliance on AI-based systems are all challenges that need to be addressed. Ultimately, AI and robotics should be viewed as tools to augment human mental health professionals rather than replace them. The most effective approach to mental health treatment may lie in combining human expertise with robotic assistance, ensuring that the compassionate, nuanced care of human therapists is complemented by the precision and efficiency of technology.