What techniques are most promising in achieving interpretable deep learning models?

by Maximilian 10:47am Feb 01, 2025

Interpretable deep learning models are crucial for enhancing trust, transparency, and accountability in AI systems. Several promising techniques and approaches have emerged to address the inherent "black-box" nature of deep learning. These techniques aim to make model predictions and decision-making processes understandable to humans.

1. Model-Agnostic Techniques

These methods work across different types of deep learning models without requiring modifications to the architecture.

a. Local Interpretable Model-agnostic Explanations (LIME)

How it works: LIME generates explanations for individual predictions by approximating the complex model with a simpler, interpretable one (e.g., linear regression) locally around the input.

Advantages: Can explain any model and provides insights at the instance level.

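Limitations: Computationally expensive, and the explanations can be unstable: because LIME fits its surrogate on randomly sampled perturbations, repeated runs or small changes to the input can produce different explanations.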
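To make the local-surrogate idea concrete, here is a minimal sketch in plain Python. It is not the lime library's API: black_box is a hypothetical stand-in for a trained network, and the names local_surrogate, noise and kernel_width are assumptions made for illustration. The sketch perturbs the input with Gaussian noise, weights each sample by its proximity to the original point, and fits a weighted linear model whose coefficients act as the explanation.

```python
import math
import random


def black_box(x):
    # Hypothetical nonlinear model standing in for a trained deep network.
    return 1.0 / (1.0 + math.exp(-(3.0 * x[0] - 2.0 * x[1] ** 2)))


def solve(a, b):
    """Solve a @ w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def local_surrogate(f, x0, n_samples=2000, noise=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear model to f around x0."""
    rng = random.Random(seed)
    d = len(x0)
    # Accumulate the weighted normal equations (X^T W X) w = X^T W y.
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        delta = [rng.gauss(0.0, noise) for _ in range(d)]
        y = f([xi + di for xi, di in zip(x0, delta)])
        # Exponential kernel: perturbations closer to x0 count more.
        w = math.exp(-sum(di * di for di in delta) / kernel_width ** 2)
        row = [1.0] + delta  # intercept term plus offsets from x0
        for i in range(d + 1):
            xty[i] += w * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += w * row[i] * row[j]
    coef = solve(xtx, xty)
    return coef[0], coef[1:]


x0 = [0.0, 1.0]
intercept, weights = local_surrogate(black_box, x0)
print("surrogate prediction at x0:", round(intercept, 3))
print("local feature weights:", [round(w, 3) for w in weights])
```

The sign of each weight says whether nudging that feature up raises or lowers the prediction near x0. Changing seed or noise shifts the weights slightly, which shows on a small scale why such explanations vary between runs.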

b. Shapley Additive Explanations (SHAP)

How it works: SHAP assigns importance scores to input features based on their contribution to the model's output, using concepts from cooperative game theory.

Advantages: Offers consistent and theoretically grounded feature importance scores.

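Limitations: High computational cost. Exact Shapley values require evaluating the model on every subset of features, which grows exponentially with the number of features, so practical implementations rely on approximations.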
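The game-theoretic idea can be sketched by computing exact Shapley values for a tiny model. This is an illustration under stated assumptions, not the shap library: model is a hypothetical three-feature function, and "removing" a feature is approximated by replacing it with a baseline value, which is one common convention among several.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical model with one additive term and one interaction term.
    return 2.0 * x[0] + x[1] * x[2]


def shapley_values(f, x, baseline):
    """Exact Shapley values; features outside a coalition take baseline values."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Probability that a coalition of this size precedes feature i
            # in a uniformly random ordering of the features.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print("Shapley values:", phi)
print("sum of values:", sum(phi), "model(x) - model(baseline):", model(x) - model(baseline))
```

The values come out at roughly 2, 3 and 3: the interaction term x[1] * x[2] is split evenly between its two features, and the scores add up to the difference between the prediction and the baseline prediction. The nested loops visit every subset of features, which is where the exponential cost comes from.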

2. Intrinsic Interpretability

These methods involve designing models that are inherently interpretable without requiring post-hoc explanations.

a. Attention Mechanisms

How it works: Attention weights in models like transformers highlight which parts of the input data the model focuses on when making decisions.

Advantages: Provides natural interpretability for tasks like text classification and image captioning.

Limitations: Attention weights do not always reflect which inputs actually drive the prediction, so a high weight is not a reliable explanation on its own.
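As a rough illustration of what gets inspected, the following sketch computes scaled dot-product attention weights for a single query. The tokens, key vectors and query are made-up values, not taken from any real model.

```python
import math


def softmax(scores):
    # Subtract the maximum for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)


# Hypothetical key vectors for a four-token sentence.
tokens = ["the", "movie", "was", "great"]
keys = [[0.1, 0.0], [0.5, 0.2], [0.0, 0.1], [1.0, 0.9]]
query = [1.0, 1.0]

att = attention_weights(query, keys)
for token, weight in zip(tokens, att):
    print(f"{token:>6}: {weight:.3f}")
```

The weights form a probability distribution over the input tokens, which is why they are tempting to read as importance scores. They describe where information is routed from, not necessarily how much each token changes the final output.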

b. Sparse Models

How it works: Encourage sparsity in model parameters or feature selection, reducing the number of factors the model relies on.

Advantages: Simplifies the model, making it easier to understand which inputs drive decisions.

Limitations: May sacrifice accuracy for interpretability.
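One common way to encourage sparsity, used here as an assumed example, is an L1 penalty on the weights. The sketch below fits a small linear model with proximal gradient descent (ISTA); the data, the penalty strength lam and the function names are illustrative choices.

```python
from itertools import product


def soft_threshold(value, threshold):
    # Proximal operator of the L1 penalty: shrink toward zero, clip small values to zero.
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def lasso_ista(X, y, lam, lr=0.5, steps=200):
    """Minimise (1/2n) * ||Xw - y||^2 + lam * ||w||_1 by proximal gradient descent."""
    n, d = len(X), len(X[0])
    w = [0.0] * d
    for _ in range(steps):
        residual = [sum(wj * xj for wj, xj in zip(w, row)) - yi for row, yi in zip(X, y)]
        grad = [sum(r * row[j] for r, row in zip(residual, X)) / n for j in range(d)]
        w = [soft_threshold(wj - lr * gj, lr * lam) for wj, gj in zip(w, grad)]
    return w


# Toy data: the target depends strongly on feature 0, weakly on feature 1, not on feature 2.
X = [list(row) for row in product([-1.0, 1.0], repeat=3)]
y = [2.0 * x0 + 0.05 * x1 for x0, x1, _ in X]

sparse_weights = lasso_ista(X, y, lam=0.1)
print("learned weights:", sparse_weights)
```

In this toy setup the weak feature is dropped entirely and the strong feature's weight is shrunk slightly, which shows both sides of the trade-off: fewer inputs to reason about, at the price of a small loss in fit.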

c. Rule-Based Deep Learning

How it works: Combines deep learning with rule-based systems to produce human-readable decision rules (e.g., neural-symbolic systems).

Advantages: Produces clear, logical outputs that can be easily interpreted.

Limitations: Rule-based constraints can limit model flexibility.

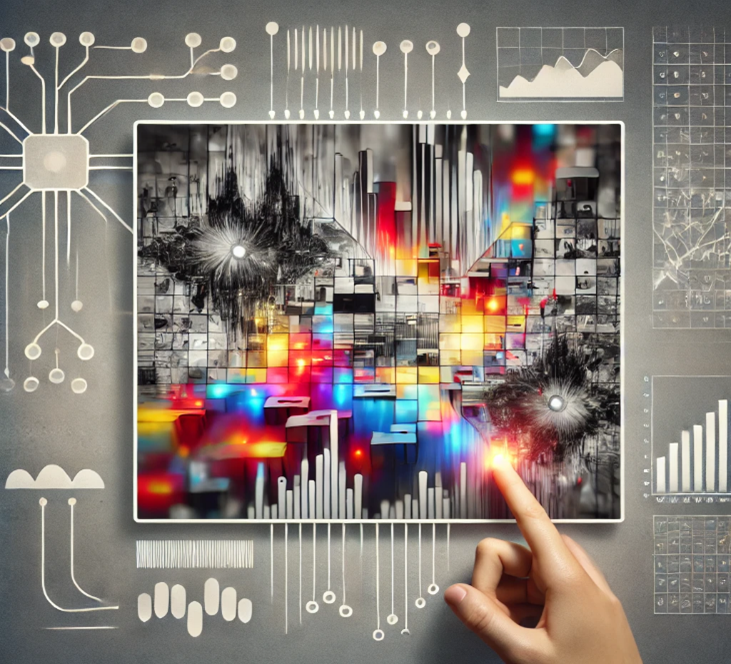

3. Visualization Techniques

Visualization methods help interpret deep learning models by providing insights into their inner workings.

a. Saliency Maps

How it works: Highlight regions of input (e.g., pixels in an image) that most influence the model's prediction by computing gradients.

Advantages: Useful for tasks like image recognition.

Limitations: Sensitive to noise and may not capture higher-order dependencies.
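A saliency map is the magnitude of the gradient of the output with respect to each input pixel. The sketch below uses central finite differences in place of backpropagation so that it runs without a deep learning framework; in practice the gradient would come from automatic differentiation. The model function is a hypothetical classifier over a 3x3 image.

```python
import math


def model(image):
    # Hypothetical classifier: driven mainly by the centre pixel,
    # slightly by two corner pixels, and not at all by the rest.
    z = 3.0 * image[1][1] - 1.0 * image[0][0] + 0.1 * image[2][2]
    return 1.0 / (1.0 + math.exp(-z))


def saliency_map(f, image, eps=1e-5):
    """Absolute gradient of f with respect to each pixel, estimated numerically."""
    rows, cols = len(image), len(image[0])
    saliency = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            original = image[r][c]
            image[r][c] = original + eps
            up = f(image)
            image[r][c] = original - eps
            down = f(image)
            image[r][c] = original  # restore the pixel
            saliency[r][c] = abs(up - down) / (2 * eps)
    return saliency


image = [[0.5] * 3 for _ in range(3)]
sal = saliency_map(model, image)
for row in sal:
    print(["%.3f" % v for v in row])
```

The centre pixel gets the largest value and pixels the model ignores get zero. Because the map is a first-order, per-pixel quantity, it says nothing about effects that only appear when several pixels change together.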

b. Feature Attribution

Examples: Grad-CAM (Gradient-weighted Class Activation Mapping), Integrated Gradients.

How it works: Highlight features that contribute most to a prediction by analyzing gradients or activations in specific layers.

Advantages: Intuitive for visualizing contributions of input features in convolutional neural networks.

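Limitations: Can be costly for very deep or complex models (Integrated Gradients needs many gradient evaluations per input), and Grad-CAM heatmaps are limited to the spatial resolution of the chosen convolutional layer.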
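Integrated Gradients attributes a prediction by averaging gradients along a straight path from a baseline input to the actual input and scaling by the input difference. The following is a small sketch of that computation; the model function and the all-zeros baseline are assumptions, and the gradient is estimated numerically rather than by backpropagation.

```python
import math


def model(x):
    # Hypothetical differentiable model with an interaction and a linear term.
    return math.tanh(x[0] * x[1]) + 0.5 * x[2]


def gradient(f, x, eps=1e-6):
    """Central finite-difference gradient (a stand-in for autodiff)."""
    grad = []
    for i in range(len(x)):
        up = x[:i] + [x[i] + eps] + x[i + 1:]
        down = x[:i] + [x[i] - eps] + x[i + 1:]
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def integrated_gradients(f, x, baseline, steps=200):
    """Midpoint Riemann-sum approximation of the path integral of gradients."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        total = [t + g for t, g in zip(total, gradient(f, point))]
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(model, x, baseline)
print("attributions:", [round(a, 4) for a in attributions])
print("sum:", round(sum(attributions), 4), "expected:", round(model(x) - model(baseline), 4))
```

The attributions add up, to within the approximation error, to the difference between the prediction and the baseline prediction. The steps parameter also makes the cost visible: each explanation needs one gradient evaluation per step.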

4. Counterfactual Explanations

How it works: Provide examples of minimal changes to the input that would lead to a different output (e.g., "If feature X were increased by 10%, the prediction would change from A to B").

Advantages: Intuitive for end-users and highlights model sensitivity.

Limitations: Computationally intensive for high-dimensional input spaces.
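A brute-force version of this search can be sketched as follows. The loan-scoring model, its weights and the applicant are hypothetical, and the search only considers changing one feature at a time in whole-percent steps. Real counterfactual methods usually optimise a distance-penalised objective over all features instead.

```python
# Hypothetical linear scoring model for a loan decision.
WEIGHTS = {"income": 0.00005, "debt": -0.0001, "years_employed": 0.2}
BIAS = -3.05


def approved(applicant):
    score = BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return score >= 0.0


def smallest_single_feature_flip(applicant, max_pct=100):
    """Smallest whole-percent change to one feature that flips the decision."""
    original = approved(applicant)
    for pct in range(1, max_pct + 1):
        for name in applicant:
            for sign in (+1, -1):
                candidate = dict(applicant)
                candidate[name] = applicant[name] * (1 + sign * pct / 100)
                if approved(candidate) != original:
                    return name, sign * pct
    return None  # no single-feature change within max_pct flips the decision


applicant = {"income": 40000.0, "debt": 10000.0, "years_employed": 4.0}
flip = smallest_single_feature_flip(applicant)
print("originally approved:", approved(applicant))
print("counterfactual:", flip)
```

For this applicant the search reports that raising income by 63% would turn the rejection into an approval. Even this restricted search evaluates the model once per feature, direction and step, which hints at why the cost grows quickly in high-dimensional input spaces.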

5. Concept-Based Interpretability

How it works: Break down the model's decisions into human-understandable concepts. For example, in image recognition, analyze whether the model associates certain features with specific high-level concepts (e.g., "stripes" for zebras).

Example: TCAV (Testing with Concept Activation Vectors) evaluates how much a model's output is influenced by specific concepts.

Advantages: Bridges the gap between model logic and human reasoning.

Limitations: Requires labeled data or predefined concepts.
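The TCAV idea can be sketched in two steps: find a direction in activation space that points toward the concept, then measure how often moving along that direction increases the class score. The sketch below simplifies in ways that should be stated plainly: the concept direction is the difference of mean activations rather than the normal of a trained linear classifier, the activations are made-up 2-D vectors, and head is a hypothetical stand-in for the layers after the inspected one.

```python
import math


def head(activation):
    # Hypothetical remainder of the network: hidden activation -> class logit.
    return activation[0] ** 2 - activation[1]


def mean(vectors):
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def concept_activation_vector(concept_acts, random_acts):
    # Simplification: unit-length difference of means between concept and random examples.
    diff = [c - r for c, r in zip(mean(concept_acts), mean(random_acts))]
    norm = math.sqrt(sum(d * d for d in diff))
    return [d / norm for d in diff]


def tcav_score(h, class_acts, cav, eps=1e-4):
    """Fraction of class examples whose logit increases along the concept direction."""
    positive = 0
    for act in class_acts:
        forward = h([a + eps * v for a, v in zip(act, cav)])
        backward = h([a - eps * v for a, v in zip(act, cav)])
        if (forward - backward) / (2 * eps) > 0:
            positive += 1
    return positive / len(class_acts)


# Made-up activations for "striped" examples, random examples, and zebra images.
concept_acts = [[1.0, 0.1], [1.2, -0.1], [0.8, 0.0]]
random_acts = [[0.0, 0.1], [0.1, -0.1], [-0.1, 0.0]]
zebra_acts = [[0.5, 0.2], [1.0, -0.3], [-0.2, 0.4], [0.8, 0.1]]

cav = concept_activation_vector(concept_acts, random_acts)
score = tcav_score(head, zebra_acts, cav)
print("concept direction:", [round(v, 3) for v in cav])
print("TCAV score:", score)
```

A score near 1 suggests the concept pushes the class score up for most examples, while a score near 0.5 suggests no consistent influence. The concept_acts list is the labeled concept data that this approach depends on.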

6. Neural Network Disentanglement

How it works: Train models to disentangle representations so that each dimension corresponds to a distinct interpretable factor.

Advantages: Promotes structured and interpretable latent spaces.

Limitations: Requires specialized training techniques.
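One well-known example of such a specialized training technique is the beta-VAE objective, named here as an assumed illustration since the text above does not single out a method. It adds a weight beta greater than 1 to the KL term of a variational autoencoder, pressuring each latent dimension to stay close to an independent standard normal. The sketch below computes only the loss for one example; the encoder and decoder are left out, and squared error is an assumed choice of reconstruction term.

```python
import math


def kl_to_standard_normal(mu, log_var):
    """KL divergence from a diagonal Gaussian N(mu, exp(log_var)) to N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def beta_vae_loss(x, x_recon, mu, log_var, beta=4.0):
    # Squared-error reconstruction term plus a beta-weighted KL penalty.
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return reconstruction + beta * kl_to_standard_normal(mu, log_var)


# Hypothetical encoder and decoder outputs for a single input.
x = [1.0, 2.0]
x_recon = [1.0, 2.0]
mu = [1.0, 0.0]
log_var = [0.0, 0.0]

loss = beta_vae_loss(x, x_recon, mu, log_var)
print("KL term:", kl_to_standard_normal(mu, log_var))
print("beta-VAE loss:", loss)
```

Raising beta strengthens the pressure toward independent latent dimensions but typically worsens reconstruction quality, another instance of the interpretability and performance trade-off.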

Challenges in Achieving Interpretability

Trade-off with Performance: Some interpretable models may underperform compared to black-box counterparts.

Complexity of Deep Models: High-dimensional and nonlinear structures are inherently difficult to interpret.

Context-Dependent Interpretability: Different stakeholders (e.g., developers vs. end-users) require varying levels of explanation detail.

Scalability: Many interpretability techniques struggle to scale with model size and dataset complexity.

Future Directions

Development of hybrid methods combining multiple interpretability techniques.

Incorporating domain-specific knowledge for better contextual explanations.

Standardization of metrics and benchmarks for evaluating interpretability.

Advances in explainable AI frameworks tailored for specific deep learning architectures, such as transformers or generative models.

By integrating these techniques, researchers and practitioners can build deep learning models that are not only powerful but also transparent and fair, fostering trust in AI systems.